By default, Contrail vRouter runs in kernel mode. That is totally fine as long as you do not care too much about performance. In kernel mode, every I/O operation has to go through the kernel, and this limits the overall performance.

A “cloud friendly” solution, as opposed to SR-IOV or PCI passthrough, is DPDK. Simply put, DPDK moves packet processing from the kernel into user space: vrouter runs in user space, which leads to better performance as it no longer has to go through the kernel every time. How DPDK actually works is out of the scope of this document; there is plenty of great documentation out there on the Internet. Here, we want to focus on how DPDK fits in Contrail and how we can configure and monitor it.

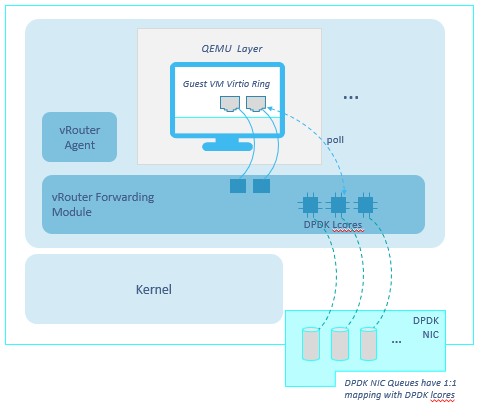

From a very high level, this is a DPDK-enabled compute node 🙂

Using DPDK requires us to know the “internals” of our server.

Modern servers have multiple CPU cores, spread across 2 sockets. Each socket is seen as a NUMA node: node0 and node1.

Install “pciutils” (it provides lspci, which we will need shortly) and start by having a look at the server NUMA topology with lscpu:

[root@server-5d ~]# lscpu | grep NUMA

NUMA node(s): 2

NUMA node0 CPU(s): 0-13,28-41

NUMA node1 CPU(s): 14-27,42-55

We have 2 NUMA nodes.

The server has 28 physical cores in total.

Those cores are “hyperthreaded”, meaning that each physical core looks like 2 cores. We say there are 28 physical cores but 56 logical cores (vcpus).

Each vcpu has a sibling. For example, 0 and 28 are siblings, 1 and 29 are siblings, and so on.
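Sibling pairs do not have to be guessed: the Linux kernel exposes them in sysfs, in the thread_siblings_list file of each CPU. Below is a minimal sketch that reads that file. The function name siblings_of and its optional second argument (an alternative sysfs root, handy for trying the function without the real hardware) are only illustrative.

```shell
# Print the hyperthread sibling set of one vcpu, as reported by the kernel.
# $1 = vcpu number, $2 = sysfs root (optional, defaults to /sys)
siblings_of() {
    cat "${2:-/sys}/devices/system/cpu/cpu$1/topology/thread_siblings_list"
}

# Look at a few vcpus, skipping the ones this host does not have.
for cpu in 4 5 6 7; do
    if [ -r "/sys/devices/system/cpu/cpu$cpu/topology/thread_siblings_list" ]; then
        echo "cpu$cpu siblings: $(siblings_of "$cpu")"
    fi
done
```

On the server used in this post, cpu4 should report 4 and 32 as siblings.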

NUMA topology can also be seen by using numactl (must be installed):

[root@server-5d ~]# numactl --hardware | grep cpus

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 28 29 30 31 32 33 34 35 36 37 38 39 40 41

node 1 cpus: 14 15 16 17 18 19 20 21 22 23 24 25 26 27 42 43 44 45 46 47 48 49 50 51 52 53 54 55

NICs are connected to a NUMA node as well.

It is useful to learn this information.

We list server NICs:

[root@server-5d ~]# lspci -nn | grep thern

01:00.0 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)

01:00.1 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)

02:00.0 Ethernet controller [0200]: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection [8086:10fb] (rev 01)

02:00.1 Ethernet controller [0200]: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection [8086:10fb] (rev 01)

We note down the PCI addresses of the interfaces we will use with DPDK, in this case 02:00.0 and 02:00.1.

We check NUMA node information for those NICs:

[root@server-5d ~]# lspci -vmms 02:00.0 | grep NUMA

NUMANode: 0

[root@server-5d ~]# lspci -vmms 02:00.1 | grep NUMA

NUMANode: 0

Both NICs are connected to NUMA node0.

Alternatively, we can get the same info via lstopo:

[root@server-5d ~]# lstopo

Machine (256GB total)

NUMANode L#0 (P#0 128GB)

Package L#0 + L3 L#0 (35MB)

...

HostBridge L#0

PCIBridge

PCI 8086:1521

Net L#0 "eth0"

PCI 8086:1521

Net L#1 "eno2"

PCIBridge

2 x { PCI 8086:10fb }
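The NUMA node of a NIC can also be read straight from sysfs, where every PCI device has a numa_node file. A small sketch follows, assuming the PCI addresses found above with the default 0000 domain prepended. The helper name nic_numa_node and its optional sysfs root argument are made up for illustration.

```shell
# Print the NUMA node a PCI device is attached to
# (the kernel reports -1 when the platform gives no NUMA information).
# $1 = full PCI address (domain:bus:device.function), $2 = sysfs root (optional)
nic_numa_node() {
    cat "${2:-/sys}/bus/pci/devices/$1/numa_node"
}

# Query the two 10G ports, skipping addresses that do not exist on this host.
for addr in 0000:02:00.0 0000:02:00.1; do
    if [ -r "/sys/bus/pci/devices/$addr/numa_node" ]; then
        echo "$addr -> NUMA node $(nic_numa_node "$addr")"
    fi
done
```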

DPDK requires the usage of Huge Pages. Huge Pages, as the name suggests, are big memory pages. The bigger size makes memory access more efficient: fewer pages are needed to map the same amount of memory, which means fewer TLB misses and page table lookups.

There are two kinds of huge pages: 2M pages and 1G pages.

Contrail works with both (if Ansible deployer is used, please use 2M huge pages).

The number of Huge Pages we have to configure may vary depending on our needs. We might choose it based on the expected number of VMs we are going to run on those compute nodes. Let’s say that, realistically, we might think of dedicating some servers to DPDK. In that case, the whole server memory can be “converted” into huge pages.

Actually, not the whole memory as you need to leave some for the host OS.

Moreover, if 1GB huge pages are used, then remember to leave some 2M hugepages for the vrouter; 128 should be enough.

This is possible as we can have a mix of 1G and 2M hugepages.

Once Huge Pages are created, we can verify their creation:

[root@server-5d ~]# cat /sys/devices/system/node/node0/hugepages/hugepages-1048576kB/nr_hugepages

0

[root@server-5d ~]# cat /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

30000

[root@server-5d ~]# cat /sys/devices/system/node/node0/hugepages/hugepages-2048kB/free_hugepages

29439

In this example we only have 2M hugepages, as the number of 1G hugepages is 0.

There are 30000 2M hugepages (60GB) on node0 and 29439 are still available.

Same verification can be done for node1.
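Page counts translate into memory as count times page size. A tiny sketch of that arithmetic, using the numbers read on node0 (the helper name hugepages_mib is only illustrative):

```shell
# Convert a huge page count into MiB.
# $1 = number of pages, $2 = page size in kB (2048 for 2M pages, 1048576 for 1G pages)
hugepages_mib() {
    echo $(( $1 * $2 / 1024 ))
}

hugepages_mib 30000 2048   # 2M pages configured on node0: prints 60000
hugepages_mib 29439 2048   # 2M pages still free on node0: prints 58878
```

The difference, 561 pages or about 1.1 GB, is what is currently in use on node0.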

Remember, if we configure N hugepages, N/2 will be created on NUMA node0 and N/2 on NUMA node1.

Each installer has its own specific way to configure huge pages. With Contrail ansible deployer, that is specified when defining the dpdk compute node within the instances.yaml file:

compute_dpdk_1:

  provider: bms

  ip: 172.30.200.46

  roles:

    vrouter:

      AGENT_MODE: dpdk

      HUGE_PAGES: 120

    openstack_compute:

Here, I highlighted only the huge pages related settings.

Inside a server, things happen fast but a slight delay can mean performance degradation. We said modern servers have cores spread across different NUMA nodes. Memory is on both nodes as well.

NUMA nodes are connected through a high speed connection, called QPI. Even if QPI is at high speed, going through it can lead to worse performance. Why?

This happens when, for example, a process running on node0 has to access memory located on node1. In this case, the process will query the local RAM controller on node0 and from there it will be redirected, through the QPI path, towards the node1 RAM controller. This longer path costs additional CPU cycles, which increases the total delay of a single operation. This higher delay, in turn, leads to a “slower” VM, hence worse performance.

In order to avoid this, we need to be sure the QPI path is crossed as rarely as possible or, better, never.

This means having vrouter, memory and NICs all connected to the same NUMA Node.

We said huge pages are created on both nodes. We verified our NICs are connected to NUMA 0. As a consequence, we will have to pin vrouter to cores belonging to NUMA 0.

Going further, even VMs should be placed on the same NUMA node. This poses a problem: suppose we have N vcpus on our server. We pin vrouter to NUMA 0, which means excluding the N/2 vcpus belonging to NUMA 1. On NUMA 0 we allocate X vcpus to vrouter and Y to the host OS. This leaves N/2-X-Y vcpus available to VMs. Even if ideal, this is not a practical choice, as it means you need 2 servers to get the number of vcpus a single server could offer. For this reason, we usually end up creating VMs on both NUMA nodes, knowing that we might pay something in terms of performance.
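To put numbers on the N/2-X-Y formula, here is a small worked example. It takes the 56-vcpu server seen in the lscpu output, 8 vcpus for vrouter and, purely as an assumption, 4 vcpus for the host OS. The function name is hypothetical.

```shell
# vcpus left for VMs on the vrouter NUMA node: N/2 - X - Y
# $1 = N (total vcpus), $2 = X (vrouter vcpus), $3 = Y (host OS vcpus, assumed value)
vm_vcpus_same_node() {
    echo $(( $1 / 2 - $2 - $3 ))
}

vm_vcpus_same_node 56 8 4   # prints 16
```

With these figures only 16 of the 56 vcpus would be usable by VMs, which shows why strict single-node placement is rarely chosen.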

Assigning DPDK vrouter to specific server cores requires the definition of a so-called coremask.

Let’s recall NUMA topology:

NUMA node0 CPU(s): 0-13,28-41 NUMA node1 CPU(s): 14-27,42-55

We have to pin vrouter cores to NUMA 0 as explained before.

We have to choose between these cpus:

0 1 2 3 4 5 6 7 8 9 10 11 12 13 28 29 30 31 32 33 34 35 36 37 38 39 40 41

We decide to assign 4 physical cores (8 vcpus) to vrouter.

Core 0 should be avoided and left to host OS.

Sibling pairs should be picked, so that both vcpus of a physical core go to vrouter.

As a result, we pin our vrouter to 4, 5, 6, 7, 32, 33, 34, 35.

Next, we write cpu numbers from the highest assigned vcpu to 0 and write a 1 for each assigned core:

cpu: 35 34 33 32 31 30 29 28 27 26 25 24 23 22 21 20 19 18 17 16 15 14 13 12 11 10  9  8  7  6  5  4  3  2  1  0

bit:  1  1  1  1  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  0  1  1  1  1  0  0  0  0

Finally, we read that long binary string and convert it into hexadecimal.

We obtain 0xf000000f0.

This is our coremask!
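The manual conversion can be double-checked with a few lines of shell. This is only a sketch: the function name coremask is made up, and it relies on 64-bit shell arithmetic, so it is valid for vcpu numbers below 63.

```shell
# Build a hexadecimal coremask from a list of vcpu numbers.
# Each vcpu N sets bit N of the mask.
coremask() {
    mask=0
    for cpu in "$@"; do
        mask=$(( mask | (1 << cpu) ))
    done
    printf '0x%x\n' "$mask"
}

coremask 4 5 6 7 32 33 34 35   # prints 0xf000000f0
```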

With Ansible deployer, core mask is deployed as follows:

compute_dpdk_1:

  provider: bms

  ip: 172.30.200.46

  roles:

    vrouter:

      AGENT_MODE: dpdk

      CPU_CORE_MASK: "0xf000000f0"

    openstack_compute:

Be sure the core mask is enclosed in quotes; otherwise the string will be interpreted as a hex number and converted to decimal, and this will lead to wrong pinning!
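To see what the unquoted value turns into, and to double-check which vcpus a given mask selects, a short sketch like the following can help. The function name mask_to_cpus is illustrative and not part of any Contrail tool.

```shell
# The number an unquoted mask becomes once it is parsed as an integer:
printf '%d\n' 0xf000000f0      # prints 64424509680

# Decode a coremask back into the vcpu numbers it selects.
# $1 = mask (hex with 0x prefix, or decimal)
mask_to_cpus() {
    mask=$(( $1 ))
    cpu=0
    list=""
    while [ "$mask" -ne 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            list="$list${list:+,}$cpu"
        fi
        mask=$(( mask >> 1 ))
        cpu=$(( cpu + 1 ))
    done
    echo "$list"
}

mask_to_cpus 0xf000000f0       # prints 4,5,6,7,32,33,34,35
```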

After vrouter has been provisioned, we can check everything was done properly.

DPDK process is running with PID 3732 (output split in multiple lines for simplicity)

root 3732 2670 99 15:35 ? 17:40:59 /usr/bin/contrail-vrouter-dpdk --no-daemon --socket-mem 1024 1024 --vlan_tci 200 --vlan_fwd_intf_name bond1 --vdev eth_bond_bond1,mode=4,xmit_policy=l23,socket_id=0,mac=0c:c4:7a:59:56:40,lacp_rate=1,slave=0000:02:00.0,slave=0000:02:00.1

We get the PID tree:

[root@server-5d ~]# pstree -p $(ps -ef | awk '$8=="/usr/bin/contrail-vrouter-dpdk" {print $2}')

contrail-vroute(3732)─┬─{contrail-vroute}(3894)

├─{contrail-vroute}(3895)

├─{contrail-vroute}(3896)

├─{contrail-vroute}(3897)

├─{contrail-vroute}(3898)

├─{contrail-vroute}(3899)

├─{contrail-vroute}(3900)

├─{contrail-vroute}(3901)

├─{contrail-vroute}(3902)

├─{contrail-vroute}(3903)

├─{contrail-vroute}(3904)

├─{contrail-vroute}(3905)

└─{contrail-vroute}(3906)

And the assigned cores for each PID:

[root@server-5d ~]# ps -mo pid,tid,comm,psr,pcpu -p $(ps -ef | awk '$8=="/usr/bin/contrail-vrouter-dpdk" {print $2}')

PID TID COMMAND PSR %CPU

3732 - contrail-vroute - 802

- 3732 - 13 4.4

- 3894 - 1 0.0

- 3895 - 11 1.8

- 3896 - 9 0.0

- 3897 - 18 0.0

- 3898 - 22 0.2

- 3899 - 4 99.9

- 3900 - 5 99.9

- 3901 - 6 99.9

- 3902 - 7 99.9

- 3903 - 32 99.9

- 3904 - 33 99.9

- 3905 - 34 99.9

- 3906 - 35 99.9

As you can see, the last 8 threads are assigned to the cores we specified within the coremask. This confirms that vrouter was provisioned correctly.
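This check can be scripted. The sketch below reads the ps output shown above, keeps the threads that are busy polling, and tells whether their core belongs to the coremask. The 90% threshold and the function names are assumptions made for this example.

```shell
# Read `ps -mo pid,tid,comm,psr,pcpu` output on stdin and print the core (PSR)
# of every thread using at least 90% CPU, i.e. the polling forwarding threads.
busy_cores() {
    awk '$2 ~ /^[0-9]+$/ && $5 + 0 >= 90 { print $4 }'
}

# Report whether each busy core is part of the coremask given as $1.
check_pinning() {
    busy_cores | while read -r core; do
        if [ $(( ($1 >> core) & 1 )) -eq 1 ]; then
            echo "core $core: in mask"
        else
            echo "core $core: NOT in mask"
        fi
    done
}

# A few lines copied from the output above; on a live system, pipe ps into it.
check_pinning 0xf000000f0 <<'EOF'
PID TID COMMAND PSR %CPU
3732 - contrail-vroute - 802
- 3732 - 13 4.4
- 3898 - 22 0.2
- 3899 - 4 99.9
- 3903 - 32 99.9
EOF
```

For the sample lines, only cores 4 and 32 are reported, both as part of the mask.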

Those 8 vcpus are running at 99.9% CPU. This is normal as DPDK forwarding cores constantly poll the NIC queues to see if there are packets to tx/rx. This leads to CPU being always around 100%.

But what do those cores represent?

Contrail vRouter cores have a specific meaning defined in the following C enum data structure:

enum {

    VR_DPDK_KNITAP_LCORE_ID = 0,

    VR_DPDK_TIMER_LCORE_ID,

    VR_DPDK_UVHOST_LCORE_ID,

    VR_DPDK_IO_LCORE_ID,        /* 3 */

    VR_DPDK_IO_LCORE_ID2,

    VR_DPDK_IO_LCORE_ID3,

    VR_DPDK_IO_LCORE_ID4,

    VR_DPDK_LAST_IO_LCORE_ID,   /* 7 */

    VR_DPDK_PACKET_LCORE_ID,    /* 8 */

    VR_DPDK_NETLINK_LCORE_ID,

    VR_DPDK_FWD_LCORE_ID,       /* 10 */

};

We can see the corresponding threads by running the “ps” command with some additional arguments:

[root@server-5b ~]# ps -T -p 54490

PID SPID TTY TIME CMD

54490 54490 ? 02:46:12 contrail-vroute

54490 54611 ? 00:02:33 eal-intr-thread

54490 54612 ? 01:35:26 lcore-slave-1

54490 54613 ? 00:00:00 lcore-slave-2

54490 54614 ? 00:00:17 lcore-slave-8

54490 54615 ? 00:02:14 lcore-slave-9

54490 54616 ? 2-21:44:06 lcore-slave-10

54490 54617 ? 2-21:44:06 lcore-slave-11

54490 54618 ? 2-21:44:06 lcore-slave-12

54490 54619 ? 2-21:44:06 lcore-slave-13

54490 54620 ? 2-21:44:06 lcore-slave-14

54490 54621 ? 2-21:44:06 lcore-slave-15

54490 54622 ? 2-21:44:06 lcore-slave-16

54490 54623 ? 2-21:44:06 lcore-slave-17

54490 54990 ? 00:00:00 lcore-slave-9

– Contrail-vroute is main thread

– lcore-slave-1 is timer thread

– lcore-slave-2 is uvhost (for qemu) thread

– lcore-slave-8 is pkt0 thread

– lcore-slave-9 is netlink thread (for nh/rt programming)

– lcore-slave-10 onwards are forwarding threads, the ones running at 100% as they are constantly polling the interfaces
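The mapping between thread names and roles can be written down as a small lookup. This sketch only restates the enum and the list above; the function name lcore_role and the role labels are illustrative.

```shell
# Map a vRouter DPDK lcore ID to its role, following the enum values:
# 0 knitap, 1 timer, 2 uvhost, 3-7 io, 8 pkt0, 9 netlink, 10 and above forwarding.
lcore_role() {
    case "$1" in
        0)         echo "knitap" ;;
        1)         echo "timer" ;;
        2)         echo "uvhost" ;;
        3|4|5|6|7) echo "io" ;;
        8)         echo "pkt0" ;;
        9)         echo "netlink" ;;
        *)         if [ "$1" -ge 10 ] 2>/dev/null; then
                       echo "forwarding"
                   else
                       echo "unknown"
                   fi ;;
    esac
}

# Thread names as shown by ps; the lcore ID is the number after the last dash.
for name in lcore-slave-1 lcore-slave-9 lcore-slave-12; do
    echo "$name: $(lcore_role "${name##*-}")"
done
```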

Since Contrail 5.0, Contrail is containerized, meaning services are hosted inside containers.

vRouter configuration parameters can be seen inside the vrouter agent container:

(vrouter-agent)[root@server-5d /]$ cat /etc/contrail/contrail-vrouter-agent.conf

[DEFAULT]

platform=dpdk

physical_interface_mac=0c:c4:7a:59:56:40

physical_interface_address=0000:00:00.0

physical_uio_driver=uio_pci_generic

There we find configuration values like vrouter mode, dpdk in this case, or the uio driver.

Moreover, we have the MAC address of the vhost0 interface.

In this setup vhost0 sits on a bond interface, meaning that the MAC is the bond MAC address.

Last, an all-zero address (0000:00:00.0) is given as the interface address.

Let’s get back for a moment to the vrouter dpdk process we can spot using “ps”:

root 44541 44279 99 12:03 ? 18:52:02 /usr/bin/contrail-vrouter-dpdk --no-daemon --socket-mem 1024 1024 --vlan_tci 200 --vlan_fwd_intf_name bond1 --vdev eth_bond_bond1,mode=4,xmit_policy=l23,socket_id=0,mac=0c:c4:7a:59:56:40,lacp_rate=1,slave=0000:02:00.0,slave=0000:02:00.1

– Process ID is 44541

– Option socket-mem tells us that DPDK is using 1GB (1024 MB) on each NUMA node. This happens because, even if, as said, optimal placement only involves NUMA node0, in the real world VMs will be spawned on both nodes

– VLAN 200 is extracted from the physical interface on which vhost0 sits

In this case vhost0 uses interface vlan0200 (tagged with vlan-id 200) as physical interface which, in turn, sits on the bond

As a consequence, inspecting this process we will see some parameters taken from the vlan interface and others from the bond

– Forward interface is bond1

– Bond is configured with mode 4 (recommended) and hash policy “l23” (policy “l34” is recommended)

– Bond1 is connected to socket 0

– Bond1 MAC address is specified

– LACP is used over the bond

– Bond slave interface addresses are listed
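The --vdev argument packs all bond settings into one comma-separated string, which is easier to read once split. A minimal sketch using standard tools (the function name vdev_options is made up):

```shell
# Split a DPDK --vdev argument into one option per line.
# The first field is the device name; the others are key=value pairs.
vdev_options() {
    echo "$1" | tr ',' '\n'
}

vdev='eth_bond_bond1,mode=4,xmit_policy=l23,socket_id=0,mac=0c:c4:7a:59:56:40,lacp_rate=1,slave=0000:02:00.0,slave=0000:02:00.1'

vdev_options "$vdev" | grep '^slave='         # PCI addresses of the bond members
vdev_options "$vdev" | grep '^xmit_policy='   # hash policy in use
```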

Last, let’s sum up how we can configure a dpdk compute with Ansible deployer:

compute_dpdk_1:

  provider: bms

  ip: 172.30.200.46

  roles:

    vrouter:

      PHYSICAL_INTERFACE: vlan0200

      AGENT_MODE: dpdk

      CPU_CORE_MASK: "0xf000000f0"

      DPDK_UIO_DRIVER: uio_pci_generic

      HUGE_PAGES: 60000

    openstack_compute:

We add several parameters:

• uio_pci_generic is a generic DPDK driver which works both on Ubuntu and RHEL (Centos)

• agent mode is dpdk, default is kernel

• 60000 huge pages will be created. Right now (May 2019) Ansible deployer only works with 2MB hugepages. This means we are allocating 60000*2M=120 GB

• Coremask tells how many cores must be used for the vrouter and which cores have to be pinned

This should cover the basics about Contrail and DPDK, and we should now be able to deploy a DPDK vrouter and verify everything was built properly.

Ciao

IoSonoUmberto
