By default, the Contrail vRouter works in flow mode.

This means that flows become a scaling factor: too many flows are a problem, both for performance and because the flow table is not infinite!

One of the hottest use-cases service providers are working on is, without any doubt, the virtualization of the mobile core. This means bringing mobile subscriber sessions into the datacenter and, as a consequence, having those sessions flow through the vRouter. That is a very high number of sessions and, as we have just said, it is important to keep them under control.

Contrail can help us here by introducing a well-known concept: aggregation. Think of a single subscriber: it will open many sessions (Facebook, YouTube, WhatsApp, Telegram, Twitter, Tinder, etc.) and, from a DC perspective, they will all follow the same path inside the mobile chain. Given this, why should we keep track of each user session on its own? We might aggregate all the sessions of a single user into a single “super-session”, which in Contrail terms is a fat flow!

Ten sessions might become one: this means reducing the number of flows on the vRouter by a factor of 10. A huge gain!
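The gain is easy to see with a toy model. The Python sketch below is only an illustration, not vRouter code: it invents ten sessions for one subscriber, with made-up destinations from the 203.0.113.0/24 documentation range, and compares how many keys we need when tracking every session versus tracking only the subscriber.

```python
from collections import namedtuple

# A session identified by its classic 5-tuple.
Session = namedtuple("Session", "src_ip src_port dst_ip dst_port proto")

# Ten hypothetical TCP sessions opened by subscriber 10.10.0.1.
sessions = [
    Session("10.10.0.1", 50000 + i, f"203.0.113.{i + 1}", 443, 6)
    for i in range(10)
]

# Per-session tracking: one key for every distinct 5-tuple.
per_session = {tuple(s) for s in sessions}

# Per-subscriber aggregation: only the source address is kept in the key.
per_subscriber = {s.src_ip for s in sessions}

print(len(per_session), "->", len(per_subscriber))  # 10 -> 1
```

Each session is really two unidirectional flows (as the baseline below shows), so the same factor applies to flow-table entries.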

Fat flows have been around since the early releases (Contrail 2), but recent versions have brought a few enhancements (and more are expected).

In this post I’m going to show how to configure fat flows and how they work.

I will not focus only on the mobile subscriber use-case (one fat session per subscriber); instead, I will describe all the possible combinations we have!

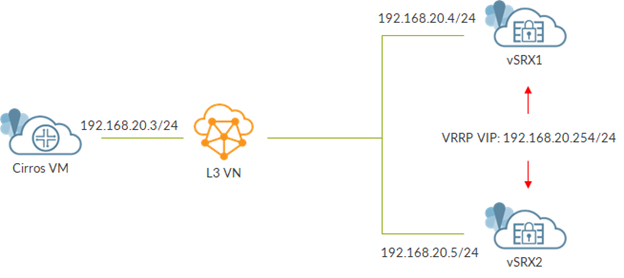

We use a very simple topology:

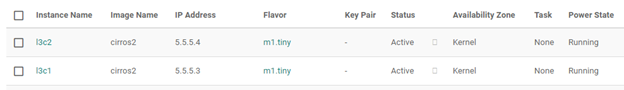

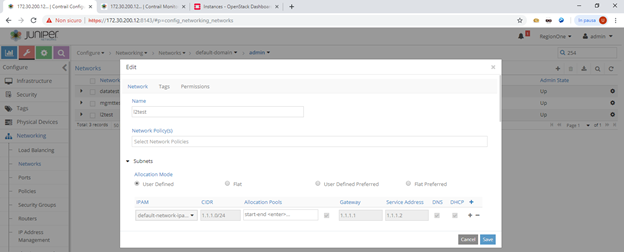

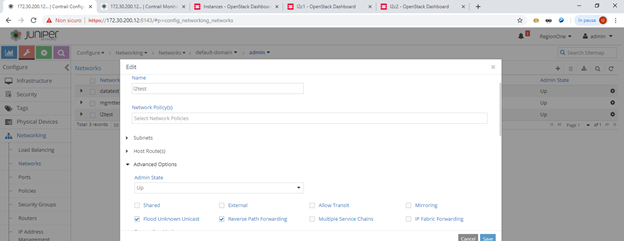

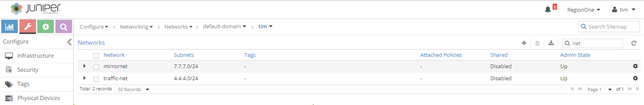

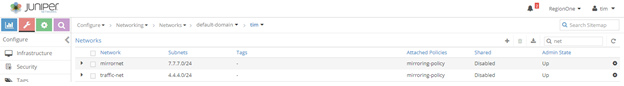

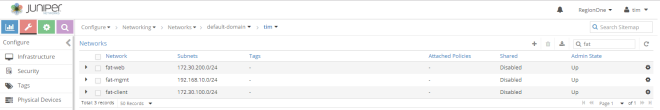

We created 3 virtual networks (L3 standard virtual networks) as we can see here:

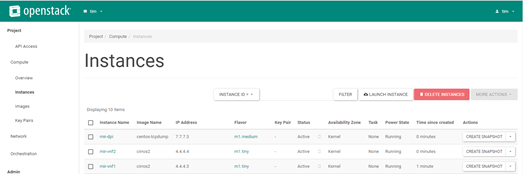

We have one vSRX emulating a set of smartphones (mobile subscribers), a second vSRX emulating the mobile chain and a third vSRX acting as the internet (a loopback with address 8.8.8.8 is configured on this VM).

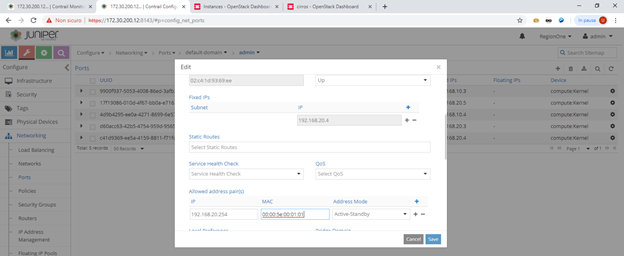

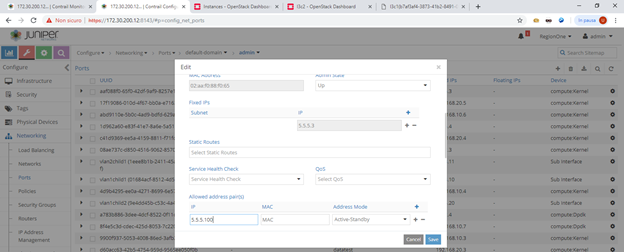

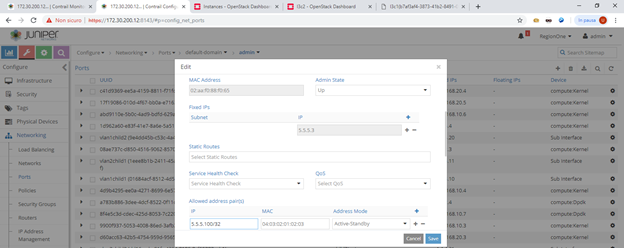

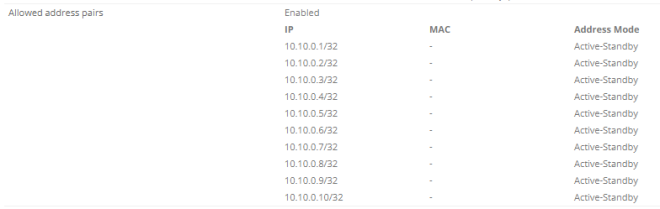

Mobile subscribers are emulated on the first vSRX by configuring multiple addresses on a loopback interface. To make them usable, and to let Contrail know about those IPs, we need to configure all of them as allowed address pairs on the corresponding VMI.

As you can see, we have 10 potential subscribers.
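If you prefer to script the allowed address pairs instead of entering them one by one, the list is easy to generate. This is a hedged sketch: the dictionary layout is our assumption, modelled on the “virtual_machine_interface_allowed_address_pairs” property of the Contrail VNC schema, so check it against the schema of your release before using it. The code only builds and prints the payload; it does not talk to any API.

```python
import ipaddress
import json

# Subscriber addresses configured on the vSRX loopback: 10.10.0.1 .. 10.10.0.10.
first = ipaddress.IPv4Address("10.10.0.1")
subscribers = [first + i for i in range(10)]

# ASSUMPTION: layout modelled on the VNC property
# virtual_machine_interface_allowed_address_pairs; verify against your release.
aap = {
    "virtual_machine_interface_allowed_address_pairs": {
        "allowed_address_pair": [
            # One /32 entry per emulated subscriber.
            {"ip": {"ip_prefix": str(addr), "ip_prefix_len": 32}}
            for addr in subscribers
        ]
    }
}

print(json.dumps(aap, indent=2))
```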

From the first vSRX we will create sessions towards the emulated internet (8.8.8.8) by running:

ssh google@8.8.8.8 source 10.10.0.1

telnet 8.8.8.8 source 10.10.0.1

We can also use different source addresses (anything from 10.10.0.1 to 10.10.0.10).

Before jumping into fat flows, connect to the compute node where the smartphone VM is running. There, run “docker ps” and get the ID of the vRouter agent container. Next, access it by running “docker exec -it CONTAINER_ID bash”, replacing CONTAINER_ID with the ID you just found. Inside the container, use the vif utility to locate the vSRX smartphone interface on the fat-client VN (the one on which we will configure fat flows). By default, it looks like this:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3178 bytes:286450 errors:0

TX packets:15559 bytes:799563 errors:0

Drops:12

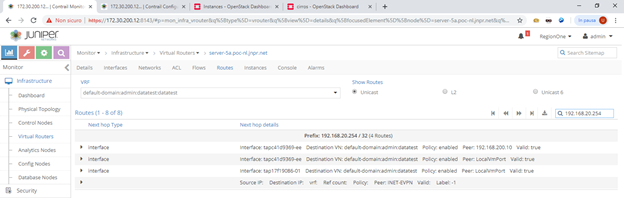

That VMI belongs to a virtual network mapped, on the vRouter, to Vrf 8.

Now, without fat flows, we open both an ssh and a telnet connection from the same source, 10.10.0.1.

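Then, from within the vRouter agent container, we look at the flow information with the following command (the quotes keep the shell from treating & as a background operator):

flow --match "10.10.0.1 & vrf 8"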

We get this:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

131252<=>202092 8.8.8.8:22 6 (8)

10.10.0.1:64165

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):20, Stats:12/2765,

SPort 56468, TTL 0, Sinfo 192.168.200.12)

173368<=>398624 10.10.0.1:52986 6 (8)

8.8.8.8:23

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):122, Stats:10/813,

SPort 53068, TTL 0, Sinfo 16.0.0.0)

202092<=>131252 10.10.0.1:64165 6 (8)

8.8.8.8:22

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):122, Stats:13/3119,

SPort 58539, TTL 0, Sinfo 16.0.0.0)

398624<=>173368 8.8.8.8:23 6 (8)

10.10.0.1:52986

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):20, Stats:8/583,

SPort 61392, TTL 0, Sinfo 192.168.200.12)

We have 4 flows, as expected. Remember that each flow is unidirectional, so an ssh session produces 2 flows (the same goes for telnet or any other session).

As soon as we close the ssh/telnet sessions, their flows disappear.

In this case, of course, there is no aggregation. Let’s use this as a baseline.
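The baseline can be reproduced with a small model (our own simplification, not the vRouter implementation): every session installs a forward and a reverse key, so two sessions cost four flow-table entries. The client ports are the ones from the listing above.

```python
from collections import namedtuple

FlowKey = namedtuple("FlowKey", "src_ip src_port dst_ip dst_port proto")


def flows_for_session(src_ip, src_port, dst_ip, dst_port, proto=6):
    """Return the forward and reverse entries a session occupies in the flow table."""
    forward = FlowKey(src_ip, src_port, dst_ip, dst_port, proto)
    reverse = FlowKey(dst_ip, dst_port, src_ip, src_port, proto)
    return {forward, reverse}


flow_table = set()
flow_table |= flows_for_session("10.10.0.1", 64165, "8.8.8.8", 22)  # ssh
flow_table |= flows_for_session("10.10.0.1", 52986, "8.8.8.8", 23)  # telnet

for key in sorted(flow_table):
    print(key)
print(len(flow_table), "flows")  # 4 flows
```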

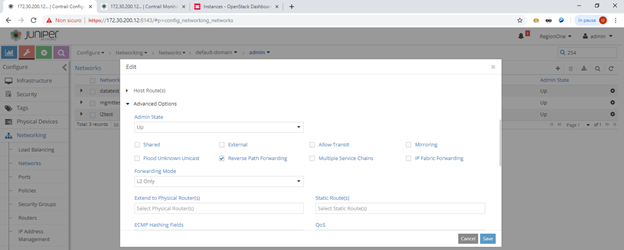

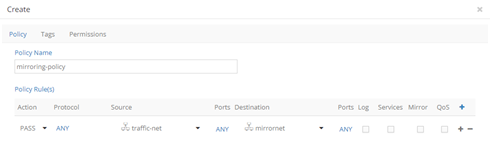

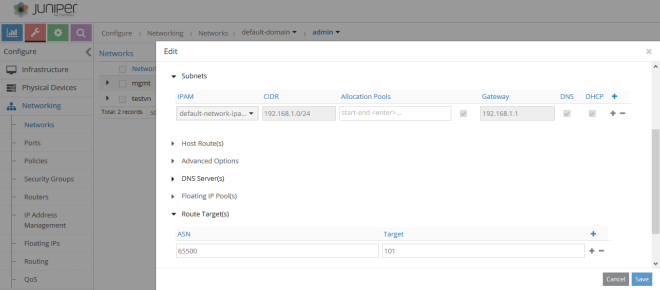

We configure fat flows on a per-virtual-network basis. In this case we will configure them on the fat-client VN, but we could do the same on fat-web.

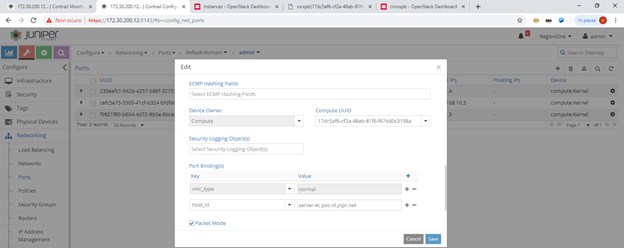

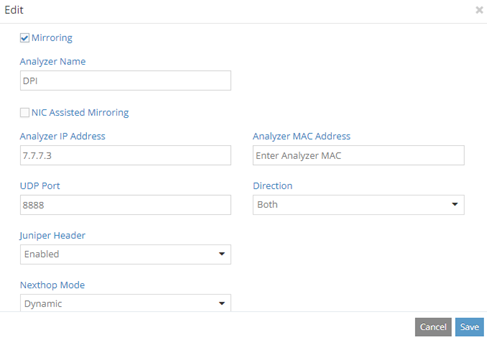

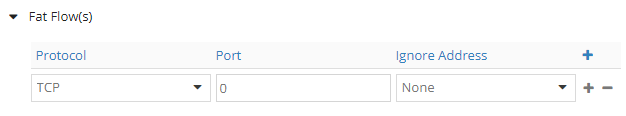

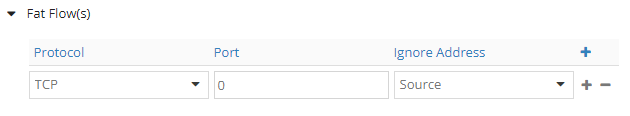

We edit the virtual network and add a fat flow:

This is what the fat flow configuration looks like.

We can choose the protocol (TCP, UDP, ICMP, SCTP).

We can choose the port: this is the destination port used to group flows. For example, if it is set to 80, all flows towards port 80 are grouped. Port 0 means any.

Setting Ignore Address tells Contrail to ignore the source or destination address (or neither of them) when grouping flows.
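To keep the three knobs straight, here is a simplified Python model of how a fat-flow rule turns a session into a flow key. It is inferred from the flow listings shown in the rest of this post, not taken from the vRouter source: the client port is always zeroed, the server port is kept only when the rule names one (otherwise it shows as 0), and an ignored address is replaced by 0.0.0.0. The exclude prefix list is left out, and the names FatFlowRule and fat_key are hypothetical.

```python
from collections import namedtuple

FlowKey = namedtuple("FlowKey", "src_ip src_port dst_ip dst_port proto")
# Hypothetical rule type; ignore is "none", "source" or "destination".
FatFlowRule = namedtuple("FatFlowRule", "proto port ignore")

ANY_IP = "0.0.0.0"  # how the flow listing shows an ignored address


def fat_key(rule, src_ip, src_port, dst_ip, dst_port, proto):
    """Return the client->server key of a session under `rule`.

    Returns None when the rule does not match, i.e. the session keeps
    its ordinary per-session flows.
    """
    if proto != rule.proto or (rule.port != 0 and dst_port != rule.port):
        return None
    src = ANY_IP if rule.ignore == "source" else src_ip
    dst = ANY_IP if rule.ignore == "destination" else dst_ip
    # Client port is always dropped; server port kept only if the rule names it.
    return FlowKey(src, 0, dst, rule.port, proto)


def reverse(key):
    """The server->client entry mirrors the forward key."""
    return FlowKey(key.dst_ip, key.dst_port, key.src_ip, key.src_port, key.proto)


ssh = ("10.10.0.1", 64165, "8.8.8.8", 22, 6)  # ssh session from the baseline
for ignore in ("none", "source", "destination"):
    for port in (0, 22):
        key = fat_key(FatFlowRule(6, port, ignore), *ssh)
        print(f"6:{port or '*'} ignore={ignore:<11} "
              f"{key.src_ip}:{key.src_port} -> {key.dst_ip}:{key.dst_port}")
```

The six forward keys it prints line up with the forward flows in the six listings below, and a telnet session under a port-22 rule gets None, meaning it keeps its own flows.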

Now let’s go through all the use-cases one by one.

Let’s start with the one shown in the image above: TCP, PORT 0, NONE.

Let’s see how the vif changed:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3178 bytes:286450 errors:0

TX packets:15559 bytes:799563 errors:0

Drops:12

FatFlows (Protocol/Port): 6:*

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

The fat flow specification appeared. As you can see, some addresses are excluded and will not be aggregated. Those are vRouter addresses; this exclusion list is one of the enhancements introduced in recent releases.

Moreover, we can see our fat flow configuration: “6:*”. That means protocol TCP (6), any port. Ignore none is implicit, as nothing else is written (this will become clearer later).

We open ssh and telnet sessions and check flows:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

125628<=>160496 10.10.0.1:0 6 (8)

8.8.8.8:0

(Gen: 5, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:38/7183,

SPort 63262, TTL 0, Sinfo 16.0.0.0)

160496<=>125628 8.8.8.8:0 6 (8)

10.10.0.1:0

(Gen: 5, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:34/6217,

SPort 62655, TTL 0, Sinfo 192.168.200.12)

This time we only have 2 flows: there was aggregation!

Specifically, we have “SRC:* -> DST:*”. This means that all the flows between 10.10.0.1 and 8.8.8.8 are aggregated regardless of the service (port).

Both ssh and telnet sessions collapse into this fat flow.

If we close the ssh/telnet sessions, unlike before, the aggregate flow does not disappear instantly: it is not bound to a specific session, and many sessions might rely on it. This is a difference compared to standard flows.

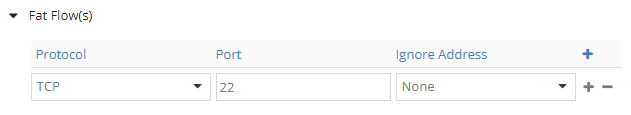

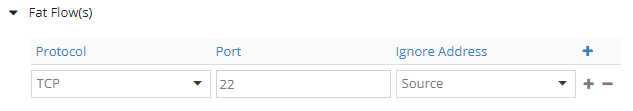

Now we configure fat flows as follows: TCP, PORT 22, NONE.

Let’s look at the vif:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3211 bytes:288281 errors:0

TX packets:15788 bytes:809607 errors:0

Drops:12

FatFlows (Protocol/Port): 6:22

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

The configuration change is reflected: “6:22”.

We open 2 ssh sessions from the same subscriber (use two terminals :))

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

377700<=>450224 10.10.0.1:0 6 (8)

8.8.8.8:22

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:26/6238,

SPort 61282, TTL 0, Sinfo 16.0.0.0)

450224<=>377700 8.8.8.8:22 6 (8)

10.10.0.1:0

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:24/5530,

SPort 56413, TTL 0, Sinfo 192.168.200.12)

We see a fat flow of this type: “SRC:* -> DST:22”.

All SSH sessions from 10.10.0.1 to 8.8.8.8 are merged into this fat flow.

As a consequence, if we open a telnet session, it will not be part of the fat flow:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

143264<=>435120 10.10.0.1:64228 6 (8)

8.8.8.8:23

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):122, Stats:11/879,

SPort 53222, TTL 0, Sinfo 16.0.0.0)

377700<=>450224 10.10.0.1:0 6 (8)

8.8.8.8:22

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:28/6370,

SPort 61282, TTL 0, Sinfo 16.0.0.0)

435120<=>143264 8.8.8.8:23 6 (8)

10.10.0.1:64228

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):20, Stats:9/635,

SPort 59946, TTL 0, Sinfo 192.168.200.12)

450224<=>377700 8.8.8.8:22 6 (8)

10.10.0.1:0

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:26/5634,

SPort 56413, TTL 0, Sinfo 192.168.200.12)

As you can see, the telnet session gets its own two flows.

Summing up, with ignore “none” both source and destination addresses matter. We can merge all sessions between a source-destination pair (port 0) or all sessions for a given service (port X).
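The two ignore “none” variants can be compared with a short, self-contained model (again our simplification, inferred from the listings above, not vRouter code). The client ports of the two ssh sessions are hypothetical; the telnet one comes from the listing.

```python
def table_entries(sessions, fat_port, proto=6):
    """Flow-table entries under a TCP fat-flow rule with ignore = none."""
    table = set()
    for src, sport, dst, dport in sessions:
        if fat_port == 0 or dport == fat_port:
            fwd = (src, 0, dst, fat_port, proto)  # client port dropped
        else:
            fwd = (src, sport, dst, dport, proto)  # ordinary per-session flow
        table.add(fwd)
        table.add((fwd[2], fwd[3], fwd[0], fwd[1], proto))  # reverse direction
    return table


sessions = [
    ("10.10.0.1", 61001, "8.8.8.8", 22),  # ssh #1 (hypothetical client port)
    ("10.10.0.1", 61002, "8.8.8.8", 22),  # ssh #2 (hypothetical client port)
    ("10.10.0.1", 64228, "8.8.8.8", 23),  # telnet
]

print(len(table_entries(sessions, fat_port=22)))  # 4: ssh pair + telnet pair
print(len(table_entries(sessions, fat_port=0)))   # 2: everything between the pair
```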

Let’s move to ignore source.

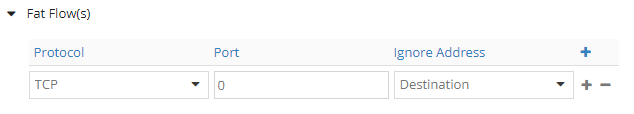

We start with: TCP, PORT 0, SOURCE.

Check vif:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3223 bytes:288919 errors:0

TX packets:15878 bytes:813521 errors:0

Drops:12

FatFlows (Protocol/Port): 6:* - Sip

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

Now we also see the ignore setting: “6:* - Sip”. The string “Sip” stands for source IP, the address we chose to ignore.

We open ssh and telnet sessions:

Listing flows matching ([8.8.8.8]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

67544<=>375180 8.8.8.8:0 6 (8)

0.0.0.0:0

(Gen: 6, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:24/5530,

SPort 53664, TTL 0, Sinfo 192.168.200.12)

375180<=>67544 0.0.0.0:0 6 (8)

8.8.8.8:0

(Gen: 6, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:26/6238,

SPort 61852, TTL 0, Sinfo 16.0.0.0)

This time we have: “*:* -> DST:*”.

Here we group all flows towards 8.8.8.8 regardless of the source address and the destination port. This means that any TCP connection towards 8.8.8.8 will be part of this fat flow. Even if we open a new ssh or telnet session towards 8.8.8.8 from 10.10.0.2-10.10.0.10, that traffic will end up in the same fat flow.

Let’s set a port: TCP, PORT 22, SOURCE

Check vif:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3233 bytes:289473 errors:0

TX packets:15942 bytes:816343 errors:0

Drops:12

FatFlows (Protocol/Port): 6:22 - Sip

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

There are no more secrets here: port 22 appeared.

Here, we open two ssh sessions towards 8.8.8.8: one from 10.10.0.1 and one from 10.10.0.2.

Listing flows matching ([8.8.8.8]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

64612<=>383724 0.0.0.0:0 6 (8)

8.8.8.8:22

(Gen: 3, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:26/6238,

SPort 64951, TTL 0, Sinfo 16.0.0.0)

383724<=>64612 8.8.8.8:22 6 (8)

0.0.0.0:0

(Gen: 3, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:24/5530,

SPort 62248, TTL 0, Sinfo 192.168.200.12)

Both sessions become part of this fat flow: “*:* -> DST:22”.

Any TCP session towards 8.8.8.8 port 22, regardless of the source, will go into this fat flow.

We open a telnet session:

Listing flows matching ([8.8.8.8]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

64612<=>383724 0.0.0.0:0 6 (8)

8.8.8.8:22

(Gen: 3, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:30/6502,

SPort 64951, TTL 0, Sinfo 16.0.0.0)

368928<=>388412 8.8.8.8:23 6 (8)

10.10.0.1:55216

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):20, Stats:8/583,

SPort 53194, TTL 0, Sinfo 192.168.200.12)

383724<=>64612 8.8.8.8:22 6 (8)

0.0.0.0:0

(Gen: 3, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:28/5738,

SPort 62248, TTL 0, Sinfo 192.168.200.12)

388412<=>368928 10.10.0.1:55216 6 (8)

8.8.8.8:23

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):122, Stats:10/813,

SPort 61171, TTL 0, Sinfo 16.0.0.0)

The telnet session is not in the fat flow, as it does not use port 22.
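With ignore source, the number of subscribers no longer matters for the sessions the rule covers. The sketch below is a simplified model, inferred from the listings above rather than taken from vRouter code, with hypothetical client ports: it opens an ssh and a telnet session from each of the ten emulated subscribers and counts the resulting entries, first with port 0 and then with port 22.

```python
def ignore_source_entries(sessions, fat_port=0, proto=6):
    """Flow-table entries under a TCP fat-flow rule with ignore = source."""
    table = set()
    for src, sport, dst, dport in sessions:
        if fat_port == 0 or dport == fat_port:
            # Source address and client port are wiped out of the key.
            fwd = ("0.0.0.0", 0, dst, fat_port, proto)
        else:
            # Not covered by the rule: ordinary per-session flow.
            fwd = (src, sport, dst, dport, proto)
        table.add(fwd)
        table.add((fwd[2], fwd[3], fwd[0], fwd[1], proto))  # reverse direction
    return table


# ssh (22) and telnet (23) from every emulated subscriber 10.10.0.1 .. 10.10.0.10
sessions = [
    (f"10.10.0.{i}", 50000 + i, "8.8.8.8", port)
    for i in range(1, 11)
    for port in (22, 23)
]

print(len(sessions), "sessions")                                     # 20 sessions
print(len(ignore_source_entries(sessions)), "entries")               # 2 entries
print(len(ignore_source_entries(sessions, fat_port=22)), "entries")  # 22 entries
```

With port 22, the ten ssh sessions share one pair of entries while each telnet session keeps its own pair, which is what the last listing shows for 10.10.0.1.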

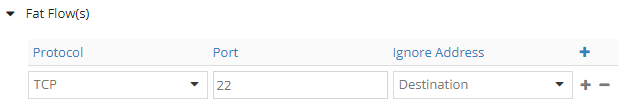

Let’s move to the last use-cases: ignore destination.

First: TCP, PORT 0, DEST.

Check vif:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3241 bytes:289876 errors:0

TX packets:16004 bytes:819014 errors:0

Drops:12

FatFlows (Protocol/Port): 6:* - Dip

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

We can easily spot what changed: Dip, because we now ignore the destination address.

We open ssh and telnet sessions from 10.10.0.1:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

402332<=>448524 0.0.0.0:0 6 (8)

10.10.0.1:0

(Gen: 6, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:2/104,

SPort 55089, TTL 0, Sinfo 192.168.200.12)

448524<=>402332 10.10.0.1:0 6 (8)

0.0.0.0:0

(Gen: 6, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:2/132,

SPort 50011, TTL 0, Sinfo 16.0.0.0)

This time the fat flow looks like “SRC:* -> *:*”.

Any session from that subscriber, regardless of destination and service, will be merged into this single fat flow. This is the mobile use-case we mentioned at the beginning: one fat flow per mobile subscriber.
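For the mobile use-case the effect is easy to quantify. The sketch below is a simplified model, inferred from the listings above and not vRouter code, with hypothetical servers from the 192.0.2.0/24 documentation range and hypothetical client ports: ten subscribers open five sessions each.

```python
def ignore_destination_entries(sessions, fat_port=0, proto=6):
    """Flow-table entries under a TCP fat-flow rule with ignore = destination."""
    table = set()
    for src, sport, dst, dport in sessions:
        if fat_port == 0 or dport == fat_port:
            # Only the subscriber address survives in the key.
            fwd = (src, 0, "0.0.0.0", fat_port, proto)
        else:
            fwd = (src, sport, dst, dport, proto)
        table.add(fwd)
        table.add((fwd[2], fwd[3], fwd[0], fwd[1], proto))  # reverse direction
    return table


# 10 subscribers, each with 5 TCP sessions to different (hypothetical) servers.
sessions = [
    (f"10.10.0.{s}", 40000 + n, f"192.0.2.{n + 1}", 443)
    for s in range(1, 11)
    for n in range(5)
]

# Without fat flows every session costs a forward and a reverse entry.
print(2 * len(sessions), "->", len(ignore_destination_entries(sessions)))  # 100 -> 20
```

Two entries per subscriber, however many sessions that subscriber opens.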

Finally, we set a port: TCP, PORT 22, DEST

Check vif for the last time:

vif0/16 OS: tap15c17258-85

Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:172.30.100.3

Vrf:8 Mcast Vrf:8 Flags:PL3L2DEr QOS:-1 Ref:6

RX packets:3252 bytes:290472 errors:0

TX packets:16083 bytes:822466 errors:0

Drops:12

FatFlows (Protocol/Port): 6:22 - Dip

FatFlows IPv4 exclude prefix list:

172.30.100.1

172.30.100.2

169.254.0.0

FatFlows IPv6 exclude prefix list:

fe80::

Nothing new here: by now we know how to read this.

We open two ssh sessions from 10.10.0.1:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

166544<=>339920 10.10.0.1:0 6 (8)

0.0.0.0:22

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:13/3119,

SPort 58238, TTL 0, Sinfo 16.0.0.0)

339920<=>166544 0.0.0.0:22 6 (8)

10.10.0.1:0

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:12/2765,

SPort 58239, TTL 0, Sinfo 192.168.200.12)

As a result, we get one single “ssh fat flow”, a flow like “SRC:* -> *:22”: all the ssh sessions from a single subscriber are merged into a single flow.

This means that a telnet session will not match that fat flow:

Listing flows matching ([10.10.0.1]:*, VRF 8)

Index Source:Port/Destination:Port Proto(V)

-----------------------------------------------------------------------------------

153448<=>487796 8.8.8.8:23 6 (8)

10.10.0.1:62123

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):20, Stats:8/583,

SPort 52129, TTL 0, Sinfo 192.168.200.12)

166544<=>339920 10.10.0.1:0 6 (8)

0.0.0.0:22

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):122, Stats:13/3119,

SPort 58238, TTL 0, Sinfo 16.0.0.0)

339920<=>166544 0.0.0.0:22 6 (8)

10.10.0.1:0

(Gen: 2, K(nh):122, Action:F, Flags:, TCP:, QOS:-1, S(nh):20, Stats:12/2765,

SPort 58239, TTL 0, Sinfo 192.168.200.12)

487796<=>153448 10.10.0.1:62123 6 (8)

8.8.8.8:23

(Gen: 1, K(nh):122, Action:F, Flags:, TCP:SSrEEr, QOS:-1, S(nh):122, Stats:10/813,

SPort 63498, TTL 0, Sinfo 16.0.0.0)

This covers all the currently supported use-cases.

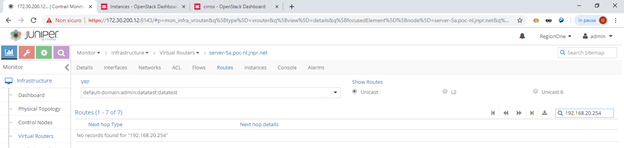

What about the future? More aggregation. As of now, the only possible source-based aggregation is obtained by setting “ignore source”. This leads to fat flows grouping flows from any source towards a specific destination address (and port, if set).

This is doable but, in real deployments, not very interesting.

Suppose your mobile subscribers are assigned addresses from the pool 10.10.0.0/16. It might be useful to aggregate their flows by source, splitting the /16 into smaller subnets, for example many /24s. This kind of aggregation will be possible in future releases (probably 5.1), so stay tuned!
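Since this feature is not available in the release used here, the following is only an illustration of the grouping idea, not of how Contrail will implement or configure it: a Python sketch that maps each subscriber address in the 10.10.0.0/16 pool to the /24 that would represent it. The function name aggregate_prefix is hypothetical.

```python
import ipaddress

# Subscriber pool from the example above.
pool = ipaddress.ip_network("10.10.0.0/16")


def aggregate_prefix(addr, prefixlen=24):
    """Map a subscriber address to the /24 (by default) that would represent it."""
    ip = ipaddress.ip_address(addr)
    if ip not in pool:
        return None  # outside the subscriber pool: no aggregation
    # strict=False lets us pass a host address and get its enclosing network.
    return ipaddress.ip_network(f"{ip}/{prefixlen}", strict=False)


for s in ["10.10.0.1", "10.10.0.200", "10.10.1.7", "10.10.255.9"]:
    print(s, "->", aggregate_prefix(s))

print(len(list(pool.subnets(new_prefix=24))), "possible /24 aggregates")  # 256
```

Up to 65,536 subscribers would then collapse into at most 256 source groups, while still keeping some per-prefix granularity that plain “ignore source” throws away.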

That is all for today

Ciao

IoSonoUmberto